Funders’ reporting and evaluation systems are rarely loved; they are more often regarded as a compliance exercise or as “policing.” But not so for the Inter-American Foundation (IAF), an organization that funds grassroots development through community-based organizations in 20 countries of Latin America and the Caribbean. In fact, IAF received better feedback from its grantees on its reporting and evaluation system than have any of the approximately 300 other foundations for which the Center for Effective Philanthropy (CEP) has produced a Grantee Perception Report (GPR).

The GPR includes the question, “How helpful was participating in the foundation’s reporting/evaluation process in strengthening the organization/program funded by the grant?”

Respondents answer on a scale from 1 (“not at all helpful”) to 7 (“extremely helpful”). In both of its GPRs, in 2011 and 2014, IAF received the highest score CEP has seen for this question, and by some margin. In 2014, IAF scored 6.00; the funders ranked second and third on the question scored 5.80 and 5.72.

What might explain this? Giving Evidence, which works to encourage charitable giving based on sound evidence, investigated. The result is a new case study, Frankly Speaking: The Inter-American Foundation’s Reporting Process: Lessons from A Positive Outlier.

As explained in an earlier post, IAF’s model is unusual in being more engaged with grantees than most funders are: it visits each grantee several times and provides an evaluator who visits biannually to help set up a data system and verify the data that grantees submit. IAF’s system also has an unusually broad set of metrics, and the Foundation lets grantees choose which ones they use.

The reporting system is part of the intervention

The main finding from Giving Evidence’s research is that for IAF, the reporting and evaluation system is part of the intervention. Whilst that may sound obvious, it isn’t how funders typically conceptualize reporting and evaluation. More often, “the funder’s intervention” is the money and perhaps some other support, while reporting and evaluation are a separate activity designed to assess the effect of that intervention.

What grantees talk about when they talk about IAF’s reporting process

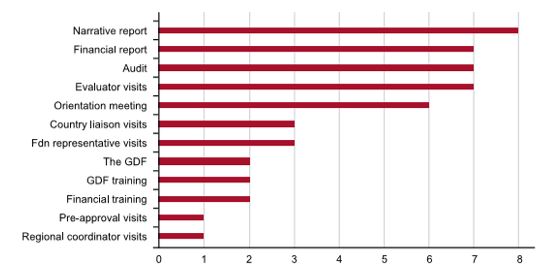

We needed to establish what grantees were referring to when they gave those high ratings in the GPR, so we asked interviewees to list what they saw as the components of the reporting process. Here lay the first surprise: the financial report and the financial audit were among the items most frequently listed, although these are not normally even considered part of reporting and evaluation.

Elements of IAF’s reporting process mentioned by grantees during interviews

What grantees value

In many ways, IAF’s reporting and evaluation system functions like a capacity-building program. It seems to give grantees four main benefits:

- Data

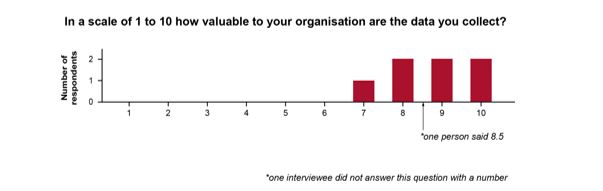

Many of the grantees said that they had no data management system at all before IAF’s involvement, and that the requirement to report, together with help setting up a sensible data collection system, meant that they were now better able to make data-informed decisions. “Before, we would have gone without collecting these data. We did not think it was important. But today, yes, we would do it independently of a funder’s requirement,” one wrote. Every one of the nine grantees that Giving Evidence interviewed rated the system as useful.

- Capacity

Grantees learn to collect, handle, interpret, present, and use data. This is particularly important for the organizations that have the least-developed skills in management and analysis and that have not previously collected data at all.

- Confidence/Courage

Grantees gain confidence in their ability to collect data — and confidence that their data are accurate and complete. Some grantees find this useful in their dealings with other organizations, including other funders. This seems to be why the financial audit is the component of the reporting and evaluation system most highly valued by grantees.

- Credibility

Grantees establish credibility with their beneficiaries and communities, and with other organizations. Respondents used phrases like “accountability” and “transparency” frequently.

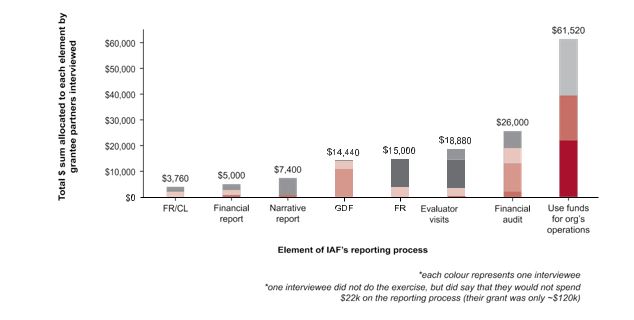

These benefits are most prized by grantees that are earlier on the learning curve. We led an exercise in which each interviewee was given a notional $22,000 and could either spend it on the various parts of the reporting and evaluation process or keep it for programs. Most (six of nine) said they would rather have the reporting and evaluation process than the equivalent money for their programs. Given the unpopularity of most funders’ reporting processes, this seemed remarkably high.

Allocation of dollars in the $22,000 exercise by grantees

The reporting framework and metrics themselves did not prove especially popular or influential, but the evaluators’ visits were seen as integral to building grantees’ knowledge and skills, as these comments show:

“[The evaluator’s] role is not to find errors. She is here to help us grow stronger and improve.”

“The recommendations made by the IAF, we also apply them to all our projects. It helps us improve our administrative systems. When the project ends, we will continue with these practices…”

“The evaluator’s … observations and criticisms… are useful for us to improve and see things that we don’t see on our own.”

Implications for IAF and other funders

A high-touch reporting and evaluation process may be useful when dealing with small grassroots organizations, as IAF does. Some grassroots organizations described themselves as so unskilled with data (as they demonstrated in our numerical exercises) that, without support, we would question the accuracy, meaning, or usefulness of the data they report to funders. Conversely, organizations that are more sophisticated and already farther along the learning curve gain less from a high-touch process; some may need less support and some may need none. It may be wise to segment grantees by the extent and type of support they need.

The full case study is published here. IAF and Giving Evidence hope that it is useful to other funders, and that it encourages them to share data about their own performance and findings in order to improve the practice of grantmaking across the field.

Caroline Fiennes is director of Giving Evidence. Follow her on Twitter at @carolinefiennes.