We’ve all been there. The time when the foundation seems to forget the research project ever happened as soon as the final check is cut. The time when your report stuffed full of creative recommendations gets buried by risk-averse leadership. The time when the stakeholder group really does seem engaged by the findings, has lots of conversations, and then…nothing changes.

These stories happen with remarkable frequency. In fact, based on the evidence, there’s ample reason to believe they are the norm rather than the exception. Among more than 120 evaluation and program executives surveyed at private foundations in the U.S. and Canada, more than three-quarters reported difficulty commissioning evaluations that result in meaningful insights for the field, their grantees, or the foundation itself, and 70 percent reported that it was challenging to incorporate evaluation results into the foundation’s future work. A survey of more than 1,600 civil servants in Pakistan and India found that “simply presenting evidence to policymakers doesn’t necessarily improve their decision-making,” with respondents indicating “that they had to make decisions too quickly to consult evidence and that they weren’t rewarded when they did.” No wonder Deloitte’s Reimagining Measurement initiative, which asked more than 125 social sector leaders what changes they most hoped to see in the next decade, identified “more effectively putting decision-making at the center” as the sector’s top priority.

This problem affects everyone working to make the world a better place, but it’s especially salient for those I call “knowledge providers”: researchers, evaluators, data scientists, forecasters, policy analysts, strategic planners, and more. It’s relevant not only to external consultants but also to internal staff whose primary role is analytical in nature. And if the trend continues, we can expect that it’s eventually going to catch up to professionals working in philanthropy. Why spend precious money and time seeking information, after all, if it’s unlikely to deliver any value to us?

Frustrating as this phenomenon may be, the reason for it is simple. All too often, we dive deep into a benchmarking report, evidence review, or policy analysis with only a shallow understanding of how the resulting information will be used. It’s not enough merely to have a general understanding of the stakeholder’s motivations for commissioning such a project. If we want this work to be useful, we have to anticipate the most important dilemmas they will face, determine what information would be most helpful in resolving those dilemmas, and then explicitly design any analysis strategy around meeting those information needs. And if we really want our work to be useful, we have to continue supporting decision makers after the final report is delivered, working hand in hand with them to ensure any choices made take into account not only the newly available information but also other important considerations such as their values, goals, perceived obligations, and existing assets.

In short, knowledge providers need to be problem solvers first, analysts second.

Decision-Driven Data Making

So what should you do if, say, a foundation wants to commission an evaluation of one of its programs but doesn’t seem to be able to articulate how the results will inform its future plans? Or if you’re tasked with creating a data dashboard for your organization on faith that the included metrics will someday somehow inform something?

The first and most important step is to interrupt the cycle as soon as possible. The further you get toward completing a knowledge product without having the conversation about how it will be used, the more likely you and your collaborators will find yourselves at the end struggling to justify the value of your work. It’s much better to have clarity about decision applications from the very beginning — not only because it helps solidify the connection between the information and the decision in the decision maker’s mind, but also because it will help you design the information-gathering process in a way that will be maximally relevant and useful.

For instance, in the case of the evaluation example above, there are probably a couple of decisions the evaluation could inform. Should the program be renewed, revised, expanded, or sunset? Does the strategy underlying the program’s design need to be adjusted? And if so, how? It’s important to recognize that these decisions, like all decisions, must consider not only what’s happened to date but what we should expect to happen going forward — and what other options may or may not be available to accomplish the organization’s goals.

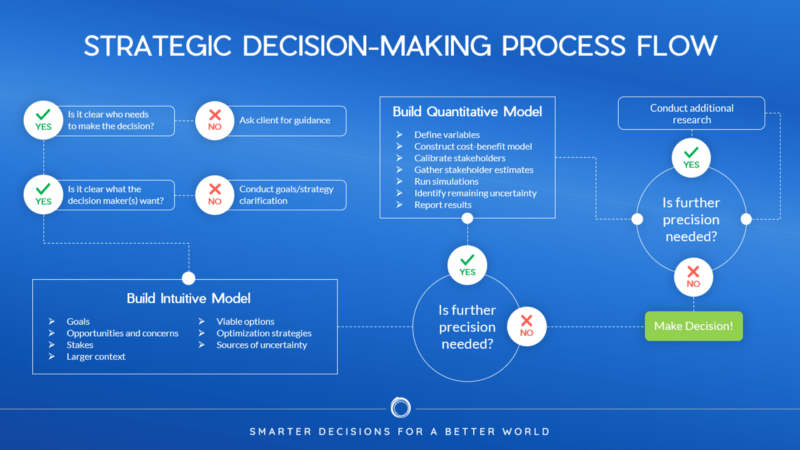

A good decision consultant will bring these and similar questions to the surface of the conversation before any analytical work gets off the ground. A great decision consultant will go further and engage the client in “rehearsing” the decision-making process at the outset of the project, while leaving room for judgments to change in response to new information. The framework created through this process can then be used to make the actual decision once the evaluation is complete and the findings have been presented to the client.

This method works because it radically reframes the purpose of gathering information; no longer is it a generalized (and unfocused) exploration to satisfy the stakeholder’s curiosity, but rather a strategy for reducing uncertainty about a specific, important decision. Rehearsing the decision in advance is an important step because it is very difficult to pick out which factors have the most potential to sway the decision one way or another using our intuitions alone (what I call “eyeballing” the decision). Only by trying to make the decision without all the information can we determine what information we actually need. The good news is that, once the most meaningful sources of uncertainty have been identified, the knowledge provider can then design the analysis in a way that is virtually guaranteed to yield actionable insights.
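To make “rehearsing” a decision concrete in its simplest quantitative form, here is a minimal Python sketch. It assumes the client can put rough low, base, and high estimates on each uncertain factor. It then applies a one-way sensitivity (“swing”) check, a common decision-analysis technique offered here as one illustration rather than the only way to rehearse a decision. Each factor is pushed to its extremes while the others stay at their base values, and any factor whose plausible range could flip the preferred option is flagged as worth investigating. The options, factors, numbers, and value model are all hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical (low, base, high) estimates the client might supply for each uncertain factor.
RANGES: Dict[str, Tuple[float, float, float]] = {
    "impact_per_participant": (0.5, 2.0, 4.0),  # outcome units per participant
    "participants": (950, 1000, 1050),
    "alternative_value": (1600, 1800, 1900),  # value of redirecting the funds elsewhere
}


def option_values(inputs: Dict[str, float]) -> Dict[str, float]:
    """Score each option; this toy value model is an assumption for illustration only."""
    return {
        "renew": inputs["impact_per_participant"] * inputs["participants"],
        "sunset": inputs["alternative_value"],
    }


def best_option(inputs: Dict[str, float]) -> str:
    """Return the option with the highest score under the given inputs."""
    values = option_values(inputs)
    return max(values, key=lambda option: values[option])


def swing_analysis(
    ranges: Dict[str, Tuple[float, float, float]]
) -> Tuple[str, Dict[str, bool]]:
    """Vary one factor at a time to its low and high values, holding the rest at base,
    and flag each factor whose range could change the preferred option."""
    base = {name: mid for name, (_, mid, _) in ranges.items()}
    base_choice = best_option(base)
    flips: Dict[str, bool] = {}
    for name, (low, _, high) in ranges.items():
        choices = {best_option({**base, name: value}) for value in (low, high)}
        flips[name] = choices != {base_choice}
    return base_choice, flips


if __name__ == "__main__":
    choice, flips = swing_analysis(RANGES)
    print(choice, flips)
```

With these made-up numbers, the sketch prefers `renew` and flags only `impact_per_participant`. That result suggests an evaluation focused on estimating the program’s impact would be the most decision-relevant use of the research budget. Many clients will not need even this much formality; a purely qualitative rehearsal can serve the same purpose.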

The “Wraparound” Model of Decision Support

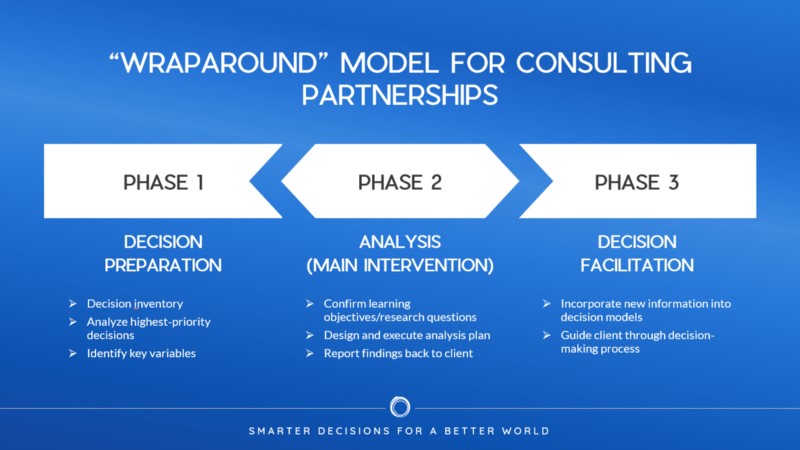

Knowledge providers who want to see their work have greater impact might find value in partnering with a decision consultant. I call the general model I propose here a “wraparound” service for knowledge initiatives.

The wraparound service has three phases, as shown in the diagram above. In the initial phase, the decision consultant works with the client to create an inventory of upcoming decisions that might be informed by the knowledge work and sorts those decisions into categories by stakes. Then, as described above, the client “rehearses” the highest-stakes decisions by analyzing them in advance. The goal is not to actually make the decision at this stage, but rather to set up the framework the client will eventually use to make that decision. Depending on the client’s resources and comfort with numbers, this might involve a simple, purely qualitative approach to understanding the decision or the creation of a more complex formal model. The decision consultant facilitates this process with the knowledge provider present so that the latter can be in the loop as the client identifies key sources of uncertainty.

After the initial framing, the analysis — still the centerpiece of the project — gets underway. The decision consultant serves as a thought partner at the beginning and end of this phase, contributing suggestions and feedback on research questions, analysis methods, and data collection instruments as appropriate to help ensure alignment with the client’s learning objectives. Otherwise, the knowledge provider owns this work and can proceed as it normally would, including offering key takeaways or recommendations to the client.

A normal engagement for a knowledge provider would close out there, with crossed fingers that the end product will have a useful life. With the wraparound service, by contrast, the decision consultant comes back onto the stage for one final act: helping to make the actual decisions. The client, decision consultant, and knowledge provider all convene to make sense of the new information in the context of the earlier analysis of each decision, tweaking assumptions and adding new options as appropriate. Then the client makes the final choice, with the decision consultant and knowledge provider on hand to answer any last-minute questions.

Moving Forward Together

Adding a decision support offering to your work is a great way to add value for your clients or employer. Note, however, that this intervention is most impactful when it can directly inform the planning of knowledge-gathering efforts; therefore, the earlier the decision consultant can be brought into the process (ideally, while the project is still in the scoping stage), the more useful they can be.

Not all decisions are rocket science. Sometimes you may get lucky: the implications of, say, a literature review or feasibility study will be so obvious that they require no deeper consideration once the analysis is complete. But banking on that luck is a foolish proposition. How many times have you run into scenarios like these?

- It isn’t clear whether a proposed change is worthwhile because the evidence for the benefit of the new strategy or tactic is weak or inconclusive.

- A proposed change makes sense assuming that the underlying environment remains stable, but upcoming or potential shifts in the environment throw that assumption into question.

- A proposed change appears to be beneficial on its own, but carries other implications for the organization and/or other entities that may make the overall impact negative.

In situations like these, a facilitated decision analysis can help stakeholders assess the wisdom of proceeding with the change while thoughtfully considering the alternatives.

Ian David Moss is CEO of Knowledge Empower. Follow him on Twitter at @IanDavidMoss.